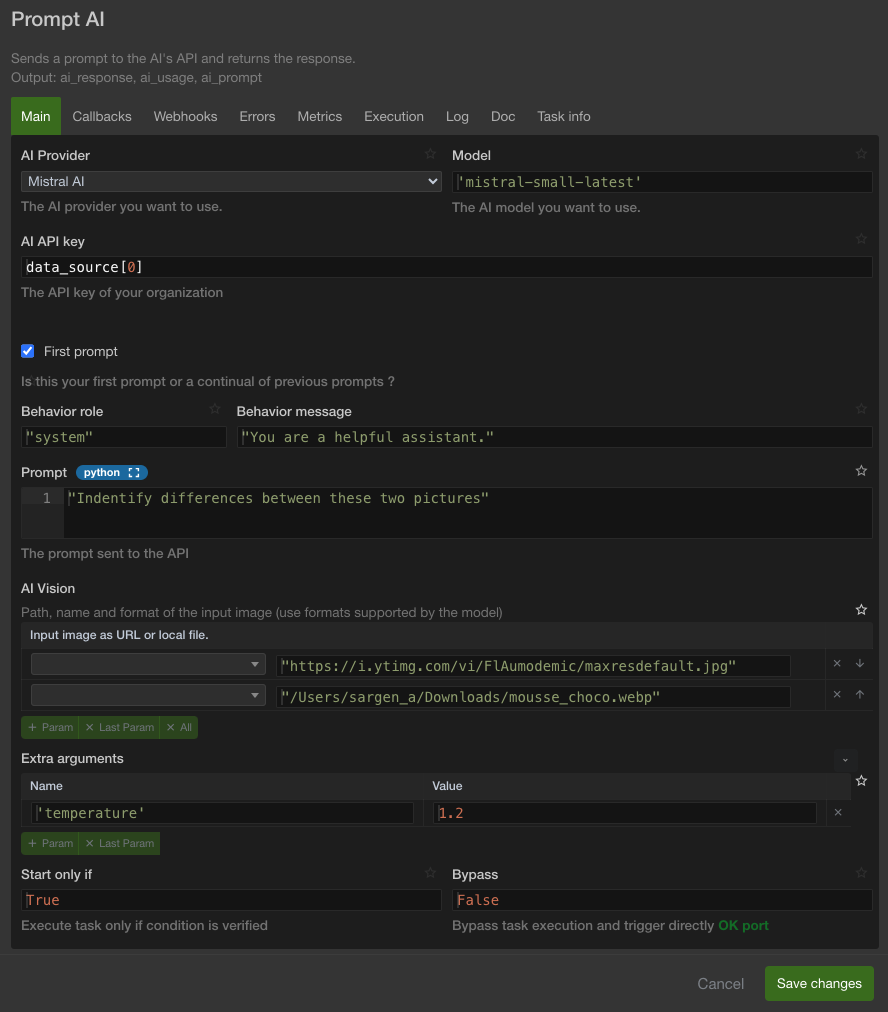

Prompt AI

"Prompt AI" is a task that lets you send a prompt to LLMs through each provider's official chat completion API. These APIs change often, so the task is designed to give you maximum control: you can pass every parameter you need and adapt when the APIs change.

This task outputs ai_response (the response message object), ai_usage (the token usage of your prompt) and ai_prompt (your formatted prompt).
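The key names inside ai_usage follow each provider's API, so they are not the same everywhere. As a sketch, assuming ai_usage is a plain dict mirroring the provider's usage object, the hypothetical helper below reads input and output token counts from the common formats: OpenAI, Mistral AI and Deepseek use prompt_tokens/completion_tokens, Anthropic uses input_tokens/output_tokens, and the Amazon Bedrock Converse API uses inputTokens/outputTokens.

```python
# Hypothetical helper: assumes ai_usage is a dict copied from the provider's response.
USAGE_KEYS = [
    ("prompt_tokens", "completion_tokens"),  # OpenAI, Mistral AI, Deepseek
    ("input_tokens", "output_tokens"),       # Anthropic
    ("inputTokens", "outputTokens"),         # Amazon Bedrock (Converse API)
]


def count_tokens(ai_usage: dict) -> tuple:
    """Return (input_tokens, output_tokens) for any of the known usage formats."""
    for in_key, out_key in USAGE_KEYS:
        if in_key in ai_usage and out_key in ai_usage:
            return ai_usage[in_key], ai_usage[out_key]
    raise KeyError(f"Unrecognized usage format: {sorted(ai_usage)}")


print(count_tokens({"prompt_tokens": 12, "completion_tokens": 30, "total_tokens": 42}))  # (12, 30)
print(count_tokens({"input_tokens": 12, "output_tokens": 30}))  # (12, 30)
```

Normalizing the counts this way makes it easier to track token consumption across a workflow that mixes providers.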

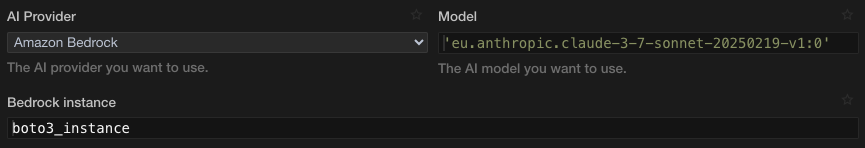

AI Provider

Here you can choose among the supported providers:

OpenAI, Mistral AI, Anthropic, Deepseek and Amazon Bedrock

Model

Here you need to enter the ID of the model you want to use. Here are links to the model lists of each provider:

OpenAI - Mistral AI - Anthropic - Deepseek - Amazon Bedrock base model IDs (on-demand throughput) - Amazon Bedrock Supported Regions and models for cross-region inference

AI API key

In this field, provide the API key issued by your provider.

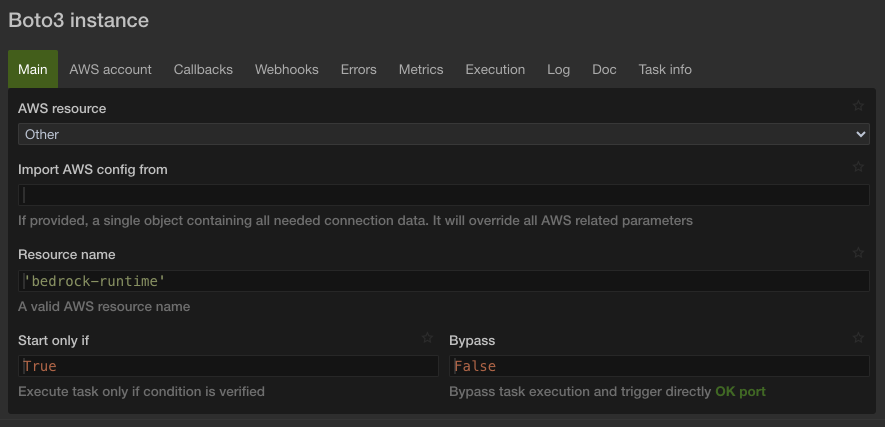

Bedrock instance

If you select Amazon Bedrock as your provider, the "AI API key" field will be replaced by the "Bedrock instance" field.

Here you need to use the boto3 task to provide a bedrock-runtime client instance, like so:

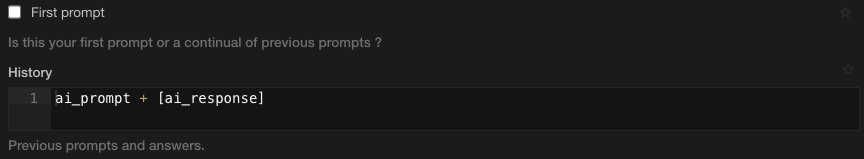

First prompt

Set this to True if this is the beginning of a conversation with the model. Depending on the model and provider, this lets you use the "Behavior" fields. If you are continuing a conversation, set this to False and use the "History" field to feed the previous conversation to the task.

Behavior role

This field defines the name of the role used for the "Behavior message". It defaults to 'system', since that is the role used by most providers and models. Some models use a different name, however: OpenAI, for example, changed this role to 'developer' for its o1 models. If you encounter issues with the 'system' role, check the documentation of the provider's API.

Behavior message

Here you can send a message to the model that determines its behavior. This field defaults to "You are a helpful assistant." Set it to None if you don't want to use a behavior prompt.
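To illustrate how "Behavior role" and "Behavior message" might shape a first prompt, here is a sketch assuming the OpenAI-style message format (a list of role/content dicts). The build_first_prompt helper is hypothetical, not the task's actual code. Note that Anthropic's Messages API expects the system prompt as a separate top-level system parameter rather than as a message, so the exact layout sent may differ by provider.

```python
from typing import Optional


def build_first_prompt(
    user_message: str,
    behavior_role: str = "system",
    behavior_message: Optional[str] = "You are a helpful assistant.",
) -> list:
    """Hypothetical sketch of a first-turn prompt in the OpenAI-style message format."""
    messages = []
    # The behavior message is left out entirely when it is set to None.
    if behavior_message is not None:
        messages.append({"role": behavior_role, "content": behavior_message})
    messages.append({"role": "user", "content": user_message})
    return messages


# Default 'system' role:
print(build_first_prompt("Summarize this ticket."))
# OpenAI o1 models expect the 'developer' role instead:
print(build_first_prompt("Summarize this ticket.", behavior_role="developer"))
```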

History

When "First prompt" is False, you can insert your previous conversation here. This field defaults to ai_prompt + [ai_response], so you can reuse the outputs of a previous "Prompt AI" task in your workflow.
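A sketch of what that default value does across two chained turns, assuming ai_prompt is a list of message dicts and ai_response is a single assistant message dict (with a provider SDK, the response may be an object rather than a dict). The continue_conversation helper is hypothetical, and the assistant reply is placeholder text: no API is called.

```python
def continue_conversation(ai_prompt: list, ai_response: dict, user_message: str) -> list:
    """Build the next prompt from a previous "Prompt AI" task's outputs (hypothetical helper)."""
    history = ai_prompt + [ai_response]  # the "History" field's default value
    return history + [{"role": "user", "content": user_message}]


first_prompt = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Name a prime number."},
]
first_response = {"role": "assistant", "content": "7"}  # placeholder reply

second_prompt = continue_conversation(first_prompt, first_response, "Now a larger one.")
for message in second_prompt:
    print(message["role"], "->", message["content"])
# system -> You are a helpful assistant.
# user -> Name a prime number.
# assistant -> 7
# user -> Now a larger one.
```

Because list concatenation builds a new list, the earlier task's ai_prompt is left unchanged and can still be reused elsewhere in the workflow.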

AI Vision

Here you can use the vision capability of the models that support it. You can provide either public image URLs or local files, as many as the provider allows, but keep in mind that images consume a lot of tokens.

Note: Amazon Bedrock only accepts local files, not URLs.
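The difference between URLs and local files shows up in how images are encoded in the request. The sketch below builds image content in two real formats: OpenAI's image_url content part, where a local file is inlined as a base64 data URL, and the Bedrock Converse API image block, which takes raw bytes from a file. The helper names are hypothetical, and how the task itself encodes images is not specified here.

```python
import base64
import mimetypes
from pathlib import Path


def openai_image_part(source: str) -> dict:
    """OpenAI-style image content part from a public URL or a local file path."""
    if source.startswith(("http://", "https://")):
        url = source
    else:
        # Local files are inlined as a base64 data URL.
        mime = mimetypes.guess_type(source)[0] or "image/png"
        data = base64.b64encode(Path(source).read_bytes()).decode("ascii")
        url = f"data:{mime};base64,{data}"
    return {"type": "image_url", "image_url": {"url": url}}


def bedrock_image_block(path: str) -> dict:
    """Bedrock Converse API image block: raw bytes read from a local file (no URLs)."""
    fmt = Path(path).suffix.lstrip(".").lower()
    fmt = "jpeg" if fmt == "jpg" else fmt  # Converse expects png, jpeg, gif or webp
    return {"image": {"format": fmt, "source": {"bytes": Path(path).read_bytes()}}}
```

In an OpenAI user message, these parts sit in the content list next to text parts such as {"type": "text", "text": "Describe this image."}; in a Bedrock Converse message, the image block sits next to {"text": "Describe this image."}.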

Extra arguments

Chat completion APIs accept many different parameters; depending on the provider's API, some are optional and some are mandatory.

Links to the documentation of the different APIs can be found at the top of this page in the "AI Provider" section.

Note: For Amazon Bedrock, the available parameters can be found here.
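As an illustration of extra arguments, here are example values for three providers, merged into a request body by a hypothetical build_request helper. It assumes the task passes extra arguments through as top-level request parameters. The parameter names are real: Anthropic's Messages API requires max_tokens, OpenAI's chat completions accept an optional temperature, and the Bedrock Converse API groups such settings under inferenceConfig and names the model modelId. The provider labels and values are illustrative.

```python
# Example extra arguments per provider (illustrative values).
EXTRA_ARGS = {
    "openai": {"temperature": 0.2},
    "anthropic": {"max_tokens": 1024},  # mandatory in Anthropic's Messages API
    "bedrock": {"inferenceConfig": {"maxTokens": 512, "temperature": 0.5}},
}


def build_request(provider: str, model: str, messages: list, extra_args: dict) -> dict:
    """Hypothetical sketch: merge extra arguments into a chat request body."""
    model_key = "modelId" if provider == "bedrock" else "model"  # Converse uses modelId
    # Extra arguments go first so they cannot overwrite the model or the messages.
    return {**extra_args, model_key: model, "messages": messages}


request = build_request(
    "bedrock",
    "your-model-id",  # placeholder: use an ID from the Amazon Bedrock model list
    [{"role": "user", "content": [{"text": "Hello"}]}],
    EXTRA_ARGS["bedrock"],
)
print(request)
```

With a bedrock-runtime client, a dict like this could be unpacked into bedrock.converse(**request); for other providers, the equivalent keyword arguments go to their own SDK calls.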