ChatGPT

⚠️ DEPRECATED !!! (Will be dropped by 5.0)

--> Use Prompt AI

This task sends a prompt to GPT-4 through the OpenAI API. Response time varies with the size of your prompt and the availability of the OpenAI API. The API can also experience outages, which will result in errors, so keep that in mind when using this task in your workflows.

The ChatGPT response is output as gpt_response.

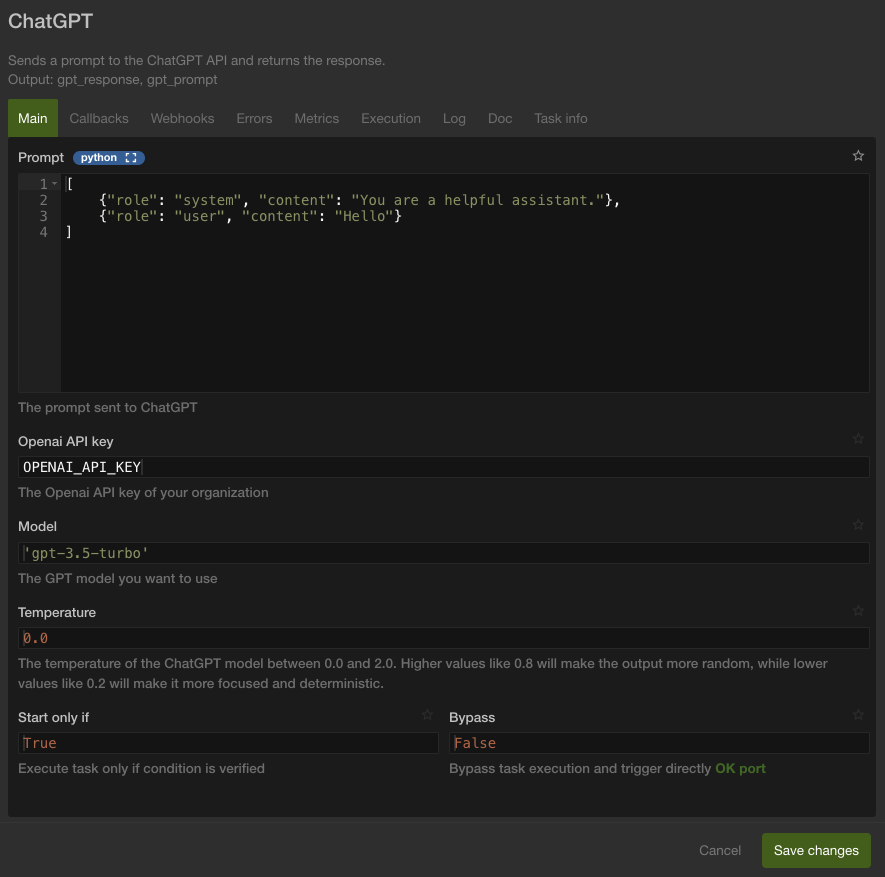

Prompt

This is the message that will be sent to ChatGPT. It must be a list of messages, each with a role and a content value.

The system role is used only once, at the beginning, to give ChatGPT guidance on how to behave.

The user role means that the message is from you.

The assistant role means that the message is from ChatGPT.

Your prompt always needs to be formatted this way. You can put anything you want in the content value as long as it is a string.
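As an illustration of the format described above, here is a minimal sketch of a prompt list in Python notation. The message texts and the `is_valid_prompt` helper are hypothetical and not part of the task. Whether the task itself enforces these rules is an assumption.

```python
# Roles described in this section.
ALLOWED_ROLES = {"system", "user", "assistant"}

# Hypothetical example prompt; the wording of each message is illustrative only.
prompt = [
    {"role": "system", "content": "You are a helpful assistant that answers briefly."},
    {"role": "user", "content": "What is the capital of France?"},
    {"role": "assistant", "content": "Paris."},
    {"role": "user", "content": "And of Italy?"},
]


def is_valid_prompt(messages):
    """Check the shape described above (hypothetical helper, not a task feature)."""
    if not isinstance(messages, list):
        return False
    for index, message in enumerate(messages):
        if not isinstance(message, dict):
            return False
        if message.get("role") not in ALLOWED_ROLES:
            return False
        # The content value can be anything as long as it is a string.
        if not isinstance(message.get("content"), str):
            return False
        # The system role is only used once, at the beginning.
        if message["role"] == "system" and index != 0:
            return False
    return True
```

Running a check like this before the task executes can surface a malformed prompt early, instead of as an API error.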

Since we are using the gpt-3.5 turbo model, you can only use 16,385 tokens at a time, which includes the tokens used in ChatGPT's response. This should be more than enough in most cases, but if you are unsure how many tokens your prompt contains, you can always test it here.

OpenAI API key

This is where you put your organization's OpenAI API key, which can be created here.

It needs to stay secret, so don't hesitate to use a data source to store and use this key safely.

Model

The model to use for your request. You can find all the GPT models here. Only use models compatible with the Chat Completions API.

Temperature

The sampling temperature to use. It must be a float between 0 and 2. The closer it is to 2, the more random the response will be.
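The constraint above can be sketched as a small check. `check_temperature` is a hypothetical helper for illustration, not something the task provides, and how the task reacts to an invalid value is not documented here.

```python
def check_temperature(value):
    """Return value unchanged if it is a float between 0 and 2 (hypothetical helper)."""
    if not isinstance(value, float):
        raise TypeError("temperature must be a float, e.g. 1.0 rather than 1")
    if not 0.0 <= value <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    return value


# Illustrative values: a low value for more predictable answers,
# a high value for more random ones.
low_randomness = check_temperature(0.2)
high_randomness = check_temperature(1.8)
```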

Output variables

On success, the output context contains gpt_response and gpt_prompt. gpt_response is the message found in ChatGPT's response object, and gpt_prompt is the prompt field you sent. You can reuse it to continue the conversation in a following ChatGPT task prompt, like this:

gpt_prompt + [gpt_response] + [{"role": "user", "content": "Today is a beautiful sunny day"}]
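To make the expression above concrete, here is a minimal sketch with made-up values standing in for the output variables. It assumes gpt_response is a single message with a role and a content value, like the entries of the prompt list. The actual values come from the output context of the previous ChatGPT task.

```python
# Hypothetical stand-ins for the output variables of a previous ChatGPT task.
gpt_prompt = [
    {"role": "system", "content": "You are a friendly assistant."},
    {"role": "user", "content": "Hello!"},
]
# Assumption: gpt_response is one assistant message, not a list.
gpt_response = {"role": "assistant", "content": "Hello! How can I help you today?"}

# Same expression as above: the earlier prompt, then the model's reply
# wrapped in a list, then the new user message.
next_prompt = gpt_prompt + [gpt_response] + [
    {"role": "user", "content": "Today is a beautiful sunny day"}
]

for message in next_prompt:
    print(f'{message["role"]}: {message["content"]}')
```

Because `+` builds a new list, gpt_prompt itself is left unchanged. Each turn added this way also counts toward the token limit, so long conversations may eventually need trimming.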